www.lesswrong.com | Bookmarks (697)

-

Supermen of the (Not so Far) Future — LessWrong

Published on May 2, 2025 3:55 PM GMT
Despite being fairly well established as a discipline, genetics...

-

AI Welfare Risks — LessWrong

Published on May 2, 2025 5:49 PM GMT
My paper "AI Welfare Risks" has been accepted for...

-

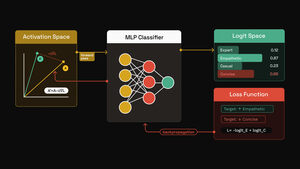

Steering Language Models in Multiple Directions Simultaneously — LessWrong

Published on May 2, 2025 3:27 PM GMT
Narmeen developed, ideated and validated K-steering at Martian. Luke...

-

RA x ControlAI video: What if AI just keeps getting smarter? — LessWrong

Published on May 2, 2025 2:19 PM GMT
The video is about extrapolating the future of AI...

-

OpenAI Preparedness Framework 2.0 — LessWrong

Published on May 2, 2025 1:10 PM GMT
Right before releasing o3, OpenAI updated its Preparedness Framework...

-

Ex-OpenAI employee amici leave to file denied in Musk v OpenAI case? — LessWrong

Published on May 2, 2025 12:27 PM GMT
Several ex-employees of OpenAI filed an amicus brief in...

-

The Continuum Fallacy and its Relatives — LessWrong

Published on May 2, 2025 2:58 AM GMT
Note: I didn't write this essay, nor do I...

-

Roads are at maximum efficiency always — LessWrong

Published on May 2, 2025 10:29 AM GMT
On a theoretical road, the number of cars traveling...

-

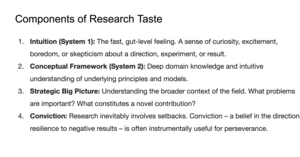

My Research Process: Understanding and Cultivating Research Taste — LessWrong

Published on May 1, 2025 11:08 PM GMT
This is post 3 of a sequence on my...

-

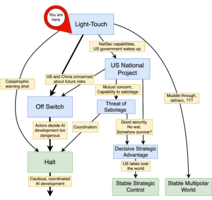

AI Governance to Avoid Extinction: The Strategic Landscape and Actionable Research Questions — LessWrong

Published on May 1, 2025 10:46 PM GMT
We’re excited to release a new AI governance research...

-

AI #114: Liars, Sycophants and Cheaters — LessWrong

Published on May 1, 2025 2:00 PM GMT
Gemini 2.5 Pro is sitting in the corner, sulking....

-

Slowdown After 2028: Compute, RLVR Uncertainty, MoE Data Wall — LessWrong

Published on May 1, 2025 1:54 PM GMT
It'll take until ~2050 to repeat the level of...

-

Anthropomorphizing AI might be good, actually — LessWrong

Published on May 1, 2025 1:50 PM GMT
It is often noted that anthropomorphizing AI can be...

-

Don't focus on updating P doom — LessWrong

Published on May 1, 2025 11:10 AM GMT
Motivation: Improving group epistemics. TL;DR (Changes to) P doom/alignment...

-

Prioritizing Work — LessWrong

Published on May 1, 2025 2:00 AM GMT
I recently read a blog post that concluded...

-

Don't rely on a "race to the top" — LessWrong

Published on May 1, 2025 12:33 AM GMT
To make frontier AI safe enough, we need to...

-

Meta-Technicalities: Safeguarding Values in Formal Systems — LessWrong

Published on April 30, 2025 11:43 PM GMT
Formal Systems Create Coordination. We live in a mesh of...

-

Obstacles in ARC's agenda: Finding explanations — LessWrong

Published on April 30, 2025 11:03 PM GMT
As an employee of the European AI Office, it's...

-

GPT-4o Responds to Negative Feedback — LessWrong

Published on April 30, 2025 8:20 PM GMT
Whoops. Sorry everyone. Rolling back to a previous version. Here’s...

-

State of play of AI progress (and related brakes on an intelligence explosion) [Linkpost] — LessWrong

Published on April 30, 2025 7:58 PM GMT
This time around, I'm sharing a post on Interconnects...

-

Misrepresentation as a Barrier for Interp (Part I) — LessWrong

Published on April 29, 2025 5:07 PM GMT
John: So there’s this thing about interp, where most...

-

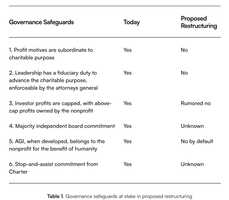

AISN #53: An Open Letter Attempts to Block OpenAI Restructuring — LessWrong

Published on April 29, 2025 4:13 PM GMT
Welcome to the AI Safety Newsletter by the Center...

-

What could Alphafold 4 look like? — LessWrong

Published on April 29, 2025 3:45 PM GMT
I made another biology-ML podcast! Two hours long, deeply...

-

Sealed Computation: Towards Low-Friction Proof of Locality — LessWrong

Published on April 29, 2025 3:26 PM GMT
Inference Certificates. As a prerequisite for the virtuality.network, we need...