~www_lesswrong_com | Bookmarks (723)

-

Luna Lovegood and the Chamber of Secrets, Part 2 — LessWrong

Published on April 11, 2025 12:42 PM GMT. Disclaimer: This is Kongo Landwalker's translation of lsusr's fiction...

-

Paper — LessWrong

Published on April 11, 2025 12:20 PM GMT. Paper is good. Somehow, a blank page and a...

-

Why are neuro-symbolic systems not considered when it comes to AI Safety? — LessWrong

Published on April 11, 2025 9:41 AM GMT. I am really not sure why neuro-symbolic systems...

-

Nuanced Models for the Influence of Information — LessWrong

Published on April 10, 2025 6:28 PM GMT

-

Playing in the Creek — LessWrong

Published on April 10, 2025 5:39 PM GMT. When I was a really small kid, one of...

-

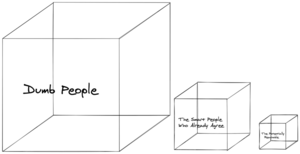

The Three Boxes: A Simple Model for Spreading Ideas — LessWrong

Published on April 10, 2025 5:15 PM GMT. This is cross-posted from my blog. We need more people...

-

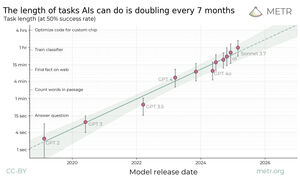

Reactions to METR task length paper are insane — LessWrong

Published on April 10, 2025 5:13 PM GMT. Epistemic status: Briefer and more to the point than...

-

Existing Safety Frameworks Imply Unreasonable Confidence — LessWrong

Published on April 10, 2025 4:31 PM GMT. This is part of the MIRI Single Author Series....

-

Arguments for and against gradual change — LessWrong

Published on April 10, 2025 2:43 PM GMT. Essentially all solutions in life are conditional: you apply...

-

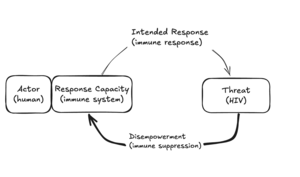

Disempowerment spirals as a likely mechanism for existential catastrophe — LessWrong

Published on April 10, 2025 2:37 PM GMT. When complex systems fail, it is often because they...

-

My day in 2035 — LessWrong

Published on April 10, 2025 2:09 PM GMT. Partially inspired by AI 2027, I've put to paper...

-

AI #111: Giving Us Pause — LessWrong

Published on April 10, 2025 2:00 PM GMT. Events in AI don’t stop merely because of a...

-

Forging A New AGI Social Contract — LessWrong

Published on April 10, 2025 1:41 PM GMT. This is the introductory piece for a series of...

-

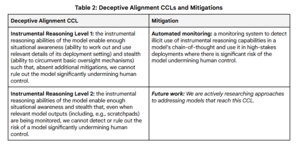

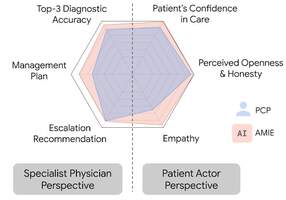

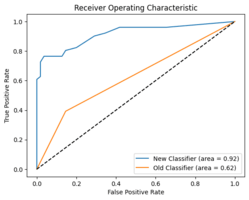

Alignment Faking Revisited: Improved Classifiers and Open Source Extensions — LessWrong

Published on April 8, 2025 5:32 PM GMT. In this post, we present a replication and extension...

-

Thinking Machines — LessWrong

Published on April 8, 2025 5:27 PM GMT. Self-understanding at a gears level: I think an AI...

-

Digital Error Correction and Lock-In — LessWrong

Published on April 8, 2025 3:46 PM GMT. Epistemic status: a collection of intervention proposals for digital...

-

What faithfulness metrics should general claims about CoT faithfulness be based upon? — LessWrong

Published on April 8, 2025 3:27 PM GMT. Consider the metric for evaluating chain-of-thought faithfulness used in...

-

AI 2027: Responses — LessWrong

Published on April 8, 2025 12:50 PM GMT. Yesterday I covered Dwarkesh Patel’s excellent podcast coverage of...

-

The first AI war will be in your computer — LessWrong

Published on April 8, 2025 9:28 AM GMT. The first AI war will be in your computer...

-

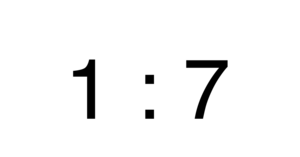

Who wants to bet me $25k at 1:7 odds that there won't be an AI market crash in the next year? — LessWrong

Published on April 8, 2025 8:31 AM GMT. If there turns out not to be an AI...

-

A Pathway to Fully Autonomous Therapists — LessWrong

Published on April 8, 2025 4:10 AM GMT. The field of psychology is coevolving with AI and...

-

Misinformation is the default, and information is the government telling you your tap water is safe to drink — LessWrong

Published on April 7, 2025 10:28 PM GMT. Status notes: I take the view that rational dialogue...

-

Log-linear Scaling is Worth the Cost due to Gains in Long-Horizon Tasks — LessWrong

Published on April 7, 2025 9:50 PM GMT. This post makes a simple point, so it will...

-

American College Admissions Doesn't Need to Be So Competitive — LessWrong

Published on April 7, 2025 5:35 PM GMT. Spoiler: “So after removing the international students from the...