~www_lesswrong_com | Bookmarks (713)

-

Do No Harm? Navigating and Nudging AI Moral Choices — LessWrong

Published on February 6, 2025 7:18 PM GMT. TL;DR: How do AI systems make moral decisions, and...

-

Open Philanthropy Technical AI Safety RFP - $40M Available Across 21 Research Areas — LessWrong

Published on February 6, 2025 6:58 PM GMT. Open Philanthropy is launching a big new Request for Proposals...

-

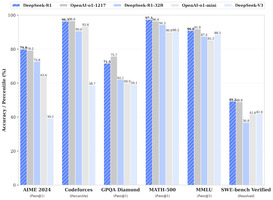

AISN #47: Reasoning Models — LessWrong

Published on February 6, 2025 6:52 PM GMT. Welcome to the AI Safety Newsletter by the Center...

-

Wild Animal Suffering Is The Worst Thing In The World — LessWrong

Published on February 6, 2025 4:15 PM GMT. Crossposted from my blog which many people are saying...

-

Detecting Strategic Deception Using Linear Probes — LessWrong

Published on February 6, 2025 3:46 PM GMT. Can you tell when an LLM is lying from...

-

Alignment Paradox and a Request for Harsh Criticism — LessWrong

Published on February 5, 2025 6:17 PM GMT. I’m not a scientist, engineer, or alignment researcher in...

-

Introducing International AI Governance Alliance (IAIGA) — LessWrong

Published on February 5, 2025 4:02 PM GMT. The International AI Governance Alliance (IAIGA) is a new...

-

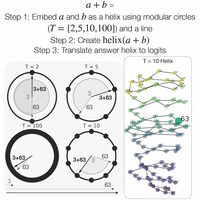

Language Models Use Trigonometry to Do Addition — LessWrong

Published on February 5, 2025 1:50 PM GMT. I (Subhash) am a Masters student in the Tegmark...

-

Reviewing LessWrong: Screwtape's Basic Answer — LessWrong

Published on February 5, 2025 4:30 AM GMT. Yeah I put this off until the last day,...

-

Journalism student looking for sources — LessWrong

Published on February 4, 2025 6:58 PM GMT. Hello Lesswrong community, I am a journalism student doing my...

-

Nick Land: Orthogonality — LessWrong

Published on February 4, 2025 9:07 PM GMT. Editor's note: Due to the interest aroused by @jessicata's posts...

-

Subjective Naturalism in Decision Theory: Savage vs. Jeffrey–Bolker — LessWrong

Published on February 4, 2025 8:34 PM GMT. Summary: This post outlines how a view we call subjective...

-

Anti-Slop Interventions? — LessWrong

Published on February 4, 2025 7:50 PM GMT. In his recent post arguing against AI Control research,...

-

We’re in Deep Research — LessWrong

Published on February 4, 2025 5:20 PM GMT. The latest addition to OpenAI’s Pro offerings is their...

-

The Capitalist Agent — LessWrong

Published on February 4, 2025 3:32 PM GMT. With the ongoing evolutions in “artificial intelligence”, of course...

-

Forecasting AGI: Insights from Prediction Markets and Metaculus — LessWrong

Published on February 4, 2025 1:03 PM GMT. I have tried to find all prediction market and...

-

Ruling Out Lookup Tables — LessWrong

Published on February 4, 2025 10:39 AM GMT. This post was written during Alex Altair's agent foundations...

-

Half-baked idea: a straightforward method for learning environmental goals? — LessWrong

Published on February 4, 2025 6:56 AM GMT. Epistemic status: I want to propose a method of...

-

Information Versus Action — LessWrong

Published on February 4, 2025 5:13 AM GMT. You can get a clearer view of what's going...

-

Utilitarian AI Alignment: Building a Moral Assistant with the Constitutional AI Method — LessWrong

Published on February 4, 2025 4:15 AM GMT. Abstract: This post presents a project from the AI Safety...

-

Tear Down the Burren — LessWrong

Published on February 4, 2025 3:40 AM GMT. I love the Burren. It hosts something like...

-

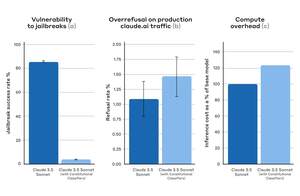

Constitutional Classifiers: Defending against universal jailbreaks (Anthropic Blog) — LessWrong

Published on February 4, 2025 2:55 AM GMT. Excerpt below. Follow the link for the full post. In...

-

Can someone, anyone, make superintelligence a more concrete concept? — LessWrong

Published on February 4, 2025 2:18 AM GMT. What especially worries me about artificial intelligence is that...

-

Gradual Disempowerment, Shell Games and Flinches — LessWrong

Published on February 2, 2025 2:47 PM GMT. Over the past year and half, I've had numerous...