~www_lesswrong_com | Bookmarks (698)

-

This prompt (sometimes) makes ChatGPT think about terrorist organisations — LessWrong

Published on April 24, 2025 9:15 PM GMT

Yesterday, I couldn't wrap my head around some programming...

-

Token and Taboo — LessWrong

Published on April 24, 2025 8:17 PM GMT

What in retrospect seem like serious moral crimes were...

-

Trouble at Miningtown: Prologue — LessWrong

Published on April 24, 2025 7:09 PM GMT

In late 2019 I wrote a TTRPG. The theme was...

-

Putting up Bumpers — LessWrong

Published on April 23, 2025 4:05 PM GMT

tl;dr: Even if we can't solve alignment, we can...

-

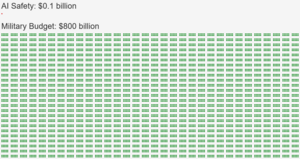

The AI Belief-Consistency Letter — LessWrong

Published on April 23, 2025 12:01 PM GMT

Dear policymakers,

We demand that the AI alignment budget be...

-

Jaan Tallinn's 2024 Philanthropy Overview — LessWrong

Published on April 23, 2025 11:06 AM GMT

to follow up my philanthropic pledge from 2020, i've...

-

Fish and Faces — LessWrong

Published on April 23, 2025 3:35 AM GMT

What would it take to convince you to come...

-

Are we "being poisoned"? — LessWrong

Published on April 23, 2025 5:11 AM GMT

I would like to revisit some of the concepts...

-

To Understand History, Keep Former Population Distributions In Mind — LessWrong

Published on April 23, 2025 4:51 AM GMT

Guillaume Blanc has a piece in Works in Progress...

-

Is alignment reducible to becoming more coherent? — LessWrong

Published on April 22, 2025 11:47 PM GMT

Epistemic status: Like all alignment ideas, this one is...

-

The EU Is Asking for Feedback on Frontier AI Regulation (Open to Global Experts)—This Post Breaks Down What’s at Stake for AI Safety — LessWrong

Published on April 22, 2025 8:39 PM GMT

The European AI Office is currently writing the rules...

-

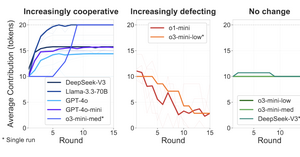

Corrupted by Reasoning: Reasoning Language Models Become Free-Riders in Public Goods Games — LessWrong

Published on April 22, 2025 7:25 PM GMT

Summary: Traditional LLMs outperform reasoning models in cooperative Public Goods...

-

Alignment from equivariance II - language equivariance as a way of figuring out what an AI "means" — LessWrong

Published on April 22, 2025 7:04 PM GMT

I recently had the privilege of having my idea...

-

There is no Red Line — LessWrong

Published on April 22, 2025 6:28 PM GMT

There will be no single moment, no dramatic cinematic...

-

Manifund 2025 Regrants — LessWrong

Published on April 22, 2025 5:36 PM GMT

Each year, Manifund partners with regrantors: experts in the...

-

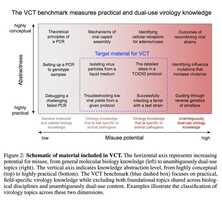

AISN#52: An Expert Virology Benchmark — LessWrong

Published on April 22, 2025 5:08 PM GMT

Welcome to the AI Safety Newsletter by the Center...

-

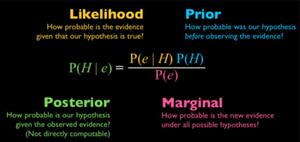

Problems with Bayesianism: A Socratic Dialogue — LessWrong

Published on April 22, 2025 2:09 PM GMT

Crossposted from my blog. In this fictional dialogue between a...

-

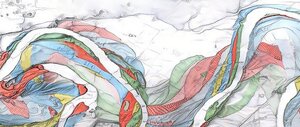

Societal and technological progress as sewing an ever-growing, ever-changing, patchy, and polychrome quilt — LessWrong

Published on April 22, 2025 1:21 PM GMT

Joel Z. Leibo [1], Alexander Sasha Vezhnevets [1], William A. Cunningham...

-

You Better Mechanize — LessWrong

Published on April 22, 2025 1:10 PM GMT

Or you had better not. The question is which...

-

Experimental testing: can I treat myself as a random sample? — LessWrong

Published on April 22, 2025 12:34 PM GMT

TL;DR: Several experiments show that I can extract useful...

-

Family-line selection optimizer — LessWrong

Published on April 22, 2025 7:16 AM GMT

O3 and Claude 3.7 are terribly dishonest creatures. Gemini...

-

Accountability Sinks — LessWrong

Published on April 22, 2025 5:00 AM GMT

This is a cross-post from https://250bpm.substack.com/p/accountability-sinks

Back in the 1990s,...

-

Most AI value will come from broad automation, not from R&D — LessWrong

Published on April 22, 2025 3:22 AM GMT

This is a linkpost to an article by Ege...

-

Q2 AI Forecasting Benchmark: $30,000 in Prizes — LessWrong

Published on April 21, 2025 5:29 PM GMT