~www_lesswrong_com | Bookmarks (698)

-

On Responsibility — LessWrong

Published on January 21, 2025 10:47 AM GMT My view on the concept of responsibility has shifted...

-

The Gentle Romance — LessWrong

Published on January 19, 2025 6:29 PM GMT A story I wrote about living through the transition...

-

Is theory good or bad for AI safety? — LessWrong

Published on January 19, 2025 10:32 AM GMT We choose to go to the moon in this...

-

What's the Right Way to think about Information Theoretic quantities in Neural Networks? — LessWrong

Published on January 19, 2025 8:04 AM GMT Tl;dr, Neural networks are deterministic and sometimes even reversible,...

-

Per Tribalismum ad Astra — LessWrong

Published on January 19, 2025 6:50 AM GMT Capitalism is powered by greed. People want to make...

-

Shut Up and Calculate: Gambling, Divination, and the Abacus as Tantra — LessWrong

Published on January 19, 2025 3:03 AM GMT THERE ARE LAKES at the bottom of the ocean....

-

Five Recent AI Tutoring Studies — LessWrong

Published on January 19, 2025 3:53 AM GMT Last week some results were released from a 6-week...

-

be the person that makes the meeting productive — LessWrong

Published on January 18, 2025 10:32 PM GMT How many times have you been in a meeting...

-

Well-being in the mind, and its implications for utilitarianism — LessWrong

Published on January 18, 2025 3:32 PM GMT When learning about classic utilitarianism (approximately, the quest to...

-

How likely is AGI to force us all to be happy forever? (much like in the Three Worlds Collide novel) — LessWrong

Published on January 18, 2025 3:39 PM GMT Hi, everyone. I'm not sure if my post is...

-

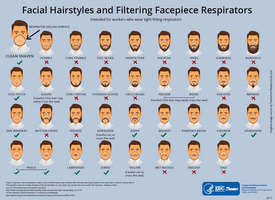

Beards and Masks? — LessWrong

Published on January 18, 2025 4:00 PM GMT In general, you're not supposed to wear a...

-

[Exercise] Two Examples of Noticing Confusion — LessWrong

Published on January 18, 2025 3:29 PM GMT Confusion is a felt sense; a bodily sensation you...

-

Scaling Wargaming for Global Catastrophic Risks with AI — LessWrong

Published on January 18, 2025 3:10 PM GMT We’re developing an AI-enabled wargaming-tool, grim, to significantly scale...

-

Alignment ideas — LessWrong

Published on January 18, 2025 12:43 PM GMT Epistemic status: I know next to nothing about evolution,...

-

Don’t ignore bad vibes you get from people — LessWrong

Published on January 18, 2025 9:20 AM GMT I think a lot of people have heard so...

-

Renormalization Redux: QFT Techniques for AI Interpretability — LessWrong

Published on January 18, 2025 3:54 AM GMT Introduction: Why QFT? In a previous post, Lauren offered a...

-

Your AI Safety focus is downstream of your AGI timeline — LessWrong

Published on January 17, 2025 9:24 PM GMT Cross-posted from Substack. Feeling intellectually understimulated, I've begun working my...

-

What are the plans for solving the inner alignment problem? — LessWrong

Published on January 17, 2025 9:45 PM GMT Inner Alignment is the problem of ensuring mesa-optimizers (i.e....

-

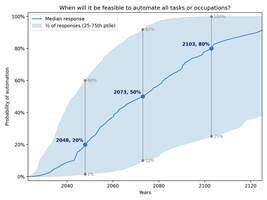

Experts' AI timelines are longer than you have been told? — LessWrong

Published on January 16, 2025 6:03 PM GMT This is a linkpost for How should we analyse...

-

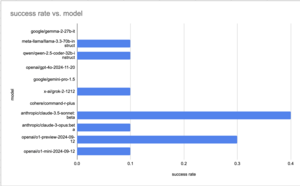

Numberwang: LLMs Doing Autonomous Research, and a Call for Input — LessWrong

Published on January 16, 2025 5:20 PM GMT Summary: Can LLMs science? The answer to this question can...

-

Topological Debate Framework — LessWrong

Published on January 16, 2025 5:19 PM GMT I would like to thank Professor Vincent Conitzer, Caspar...

-

AI #99: Farewell to Biden — LessWrong

Published on January 16, 2025 2:20 PM GMT The fun, as it were, is presumably about to...

-

Deceptive Alignment and Homuncularity — LessWrong

Published on January 16, 2025 1:55 PM GMT NB: this dialogue occurred at the very end of...

-

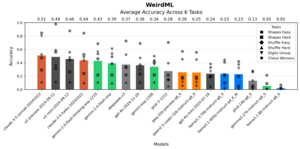

Introducing the WeirdML Benchmark — LessWrong

Published on January 16, 2025 11:38 AM GMT WeirdML website. Related posts: How good are LLMs at doing ML...