~www_lesswrong_com | Bookmarks (702)

-

Moderately More Than You Wanted To Know: Depressive Realism — LessWrong

Published on January 13, 2025 2:57 AM GMT. Depressive realism is the idea that depressed people have...

-

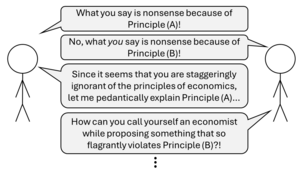

Applying traditional economic thinking to AGI: a trilemma — LessWrong

Published on January 13, 2025 1:23 AM GMT. Traditional economics thinking has two strong principles, each based...

-

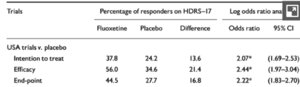

Do Antidepressants work? (First Take) — LessWrong

Published on January 12, 2025 5:11 PM GMT. I've been researching the controversy over whether antidepressants truly...

-

AI Developed: A Novel Idea for Harnessing Magnetic Reconnection as an Energy Source — LessWrong

Published on January 12, 2025 5:11 PM GMT. Introduction: Magnetic reconnection, the sudden rearrangement of magnetic field lines, drives dramatic...

-

Building AI Research Fleets — LessWrong

Published on January 12, 2025 6:23 PM GMT. From AI scientist to AI research fleet: Research automation is...

-

Near term discussions need something smaller and more concrete than AGI — LessWrong

Published on January 11, 2025 6:24 PM GMT. Motivation: I want a more concrete concept than AGI[1] to talk...

-

A proposal for iterated interpretability with known-interpretable narrow AIs — LessWrong

Published on January 11, 2025 2:43 PM GMT. I decided, as a challenge to myself, to spend...

-

We need a universal definition of 'agency' and related words — LessWrong

Published on January 11, 2025 3:22 AM GMT. And by "we" I mean "I". I'm the one...

-

AI for medical care for hard-to-treat diseases? — LessWrong

Published on January 10, 2025 11:55 PM GMT. With LLM-based AI passing benchmarks that would challenge people...

-

Beliefs and state of mind into 2025 — LessWrong

Published on January 10, 2025 10:07 PM GMT. This post is to record the state of my...

-

Is AI Alignment Enough? — LessWrong

Published on January 10, 2025 6:57 PM GMT. Virtually everyone I see in the AI safety community...

-

Recommendations for Technical AI Safety Research Directions — LessWrong

Published on January 10, 2025 7:34 PM GMT. Anthropic’s Alignment Science team conducts technical research aimed at...

-

What are some scenarios where an aligned AGI actually helps humanity, but many/most people don't like it? — LessWrong

Published on January 10, 2025 6:13 PM GMT. One can call it "deceptive misalignment": the aligned AGI...

-

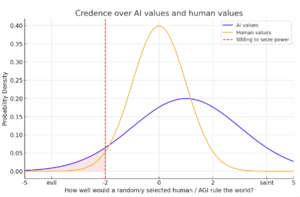

Human takeover might be worse than AI takeover — LessWrong

Published on January 10, 2025 4:53 PM GMT. Epistemic status -- sharing rough notes on an important...

-

The Alignment Mapping Program: Forging Independent Thinkers in AI Safety - A Pilot Retrospective — LessWrong

Published on January 10, 2025 4:22 PM GMT. The Alignment Mapping Program: Forging Independent Thinkers in AI...

-

Discursive Warfare and Faction Formation — LessWrong

Published on January 9, 2025 4:47 PM GMT. Response to Discursive Games, Discursive Warfare. The discursive distortions you...

-

Can we rescue Effective Altruism? — LessWrong

Published on January 9, 2025 4:40 PM GMT. Last year Timothy Telleen-Lawton and I recorded a podcast...

-

AI #98: World Ends With Six Word Story — LessWrong

Published on January 9, 2025 4:30 PM GMT. The world is kind of on fire. The world...

-

Many Worlds and the Problems of Evil — LessWrong

Published on January 9, 2025 4:10 PM GMT. Summary: The Many-Worlds interpretation of quantum mechanics helps us...

-

PIBBSS Fellowship 2025: Bounties and Cooperative AI Track Announcement — LessWrong

Published on January 9, 2025 2:23 PM GMT. We're excited to announce that the PIBBSS Fellowship 2025 now...

-

Thoughts on the In-Context Scheming AI Experiment — LessWrong

Published on January 9, 2025 2:19 AM GMT. These are thoughts in response to the paper "Frontier...

-

A Systematic Approach to AI Risk Analysis Through Cognitive Capabilities — LessWrong

Published on January 9, 2025 12:18 AM GMT. A Systematic Approach to AI Risk Analysis Through Cognitive...

-

Aristocracy and Hostage Capital — LessWrong

Published on January 8, 2025 7:38 PM GMT. There’s a conventional narrative by which the pre-20th century...

-

What is the most impressive game LLMs can play well? — LessWrong

Published on January 8, 2025 7:38 PM GMT. Epistemic status: This is an off-the-cuff question. ~5 years ago...